forked from open-webui/open-webui

commit 0ddb2b320b

107 changed files with 5981 additions and 1976 deletions

.github/FUNDING.yml (new file, vendored, 1 change)

@@ -0,0 +1 @@

```yaml
github: tjbck
```

.github/ISSUE_TEMPLATE/bug_report.md (vendored, 2 changes)

@@ -4,7 +4,6 @@ about: Create a report to help us improve

```markdown
title: ''
labels: ''
assignees: ''

---

# Bug Report
```

@@ -31,6 +30,7 @@ assignees: ''

```markdown
## Reproduction Details

**Confirmation:**

- [ ] I have read and followed all the instructions provided in the README.md.
- [ ] I have reviewed the troubleshooting.md document.
- [ ] I have included the browser console logs.
```

.github/ISSUE_TEMPLATE/feature_request.md (vendored, 1 change)

@@ -4,7 +4,6 @@ about: Suggest an idea for this project

```markdown
title: ''
labels: ''
assignees: ''

---

**Is your feature request related to a problem? Please describe.**
```

.github/workflows/format-backend.yaml (new file, vendored, 27 changes)

@@ -0,0 +1,27 @@

```yaml
name: Python CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Format Backend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version:
          - latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Python
        uses: actions/setup-python@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install yapf
      - name: Format backend
        run: bun run format:backend
```

.github/workflows/format-build-frontend.yaml (new file, vendored, 22 changes)

@@ -0,0 +1,22 @@

```yaml
name: Bun CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Format & Build Frontend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - run: bun --version
      - name: Install frontend dependencies
        run: bun install
      - name: Format frontend
        run: bun run format
      - name: Build frontend
        run: bun run build
```

.github/workflows/lint-backend.disabled (new file, vendored, 27 changes)

@@ -0,0 +1,27 @@

```yaml
name: Python CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Lint Backend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version:
          - latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Python
        uses: actions/setup-python@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - name: Install dependencies
        run: |
          python -m pip install --upgrade pip
          pip install pylint
      - name: Lint backend
        run: bun run lint:backend
```

.github/workflows/lint-frontend.disabled (new file, vendored, 21 changes)

@@ -0,0 +1,21 @@

```yaml
name: Bun CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Lint Frontend'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - name: Use Bun
        uses: oven-sh/setup-bun@v1
      - run: bun --version
      - name: Install frontend dependencies
        run: bun install --frozen-lockfile
      - run: bun run lint:frontend
      - run: bun run lint:types
        if: success() || failure()
```

.github/workflows/node.js.yaml (deleted, vendored, 27 changes)

@@ -1,27 +0,0 @@

```yaml
name: Node.js CI
on:
  push:
    branches: ['main']
  pull_request:
jobs:
  build:
    name: 'Fmt, Lint, & Build'
    env:
      PUBLIC_API_BASE_URL: ''
    runs-on: ubuntu-latest
    strategy:
      matrix:
        node-version:
          - latest
    steps:
      - uses: actions/checkout@v3
      - name: Use Node.js ${{ matrix.node-version }}
        uses: actions/setup-node@v3
        with:
          node-version: ${{ matrix.node-version }}
      - run: node --version
      - run: npm clean-install
      - run: npm run fmt
      #- run: npm run lint
      #- run: npm run lint:types
      - run: npm run build
```

.gitignore (vendored, 290 changes)

@@ -8,3 +8,293 @@ node_modules

```gitignore
!.env.example
vite.config.js.timestamp-*
vite.config.ts.timestamp-*
# Byte-compiled / optimized / DLL files
__pycache__/
*.py[cod]
*$py.class

# C extensions
*.so

# Distribution / packaging
.Python
build/
develop-eggs/
dist/
downloads/
eggs/
.eggs/
lib64/
parts/
sdist/
var/
wheels/
share/python-wheels/
*.egg-info/
.installed.cfg
*.egg
MANIFEST

# PyInstaller
# Usually these files are written by a python script from a template
# before PyInstaller builds the exe, so as to inject date/other infos into it.
*.manifest
*.spec

# Installer logs
pip-log.txt
pip-delete-this-directory.txt

# Unit test / coverage reports
htmlcov/
.tox/
.nox/
.coverage
.coverage.*
.cache
nosetests.xml
coverage.xml
*.cover
*.py,cover
.hypothesis/
.pytest_cache/
cover/

# Translations
*.mo
*.pot

# Django stuff:
*.log
local_settings.py
db.sqlite3
db.sqlite3-journal

# Flask stuff:
instance/
.webassets-cache

# Scrapy stuff:
.scrapy

# Sphinx documentation
docs/_build/

# PyBuilder
.pybuilder/
target/

# Jupyter Notebook
.ipynb_checkpoints

# IPython
profile_default/
ipython_config.py

# pyenv
# For a library or package, you might want to ignore these files since the code is
# intended to run in multiple environments; otherwise, check them in:
# .python-version

# pipenv
# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.
# However, in case of collaboration, if having platform-specific dependencies or dependencies
# having no cross-platform support, pipenv may install dependencies that don't work, or not
# install all needed dependencies.
#Pipfile.lock

# poetry
# Similar to Pipfile.lock, it is generally recommended to include poetry.lock in version control.
# This is especially recommended for binary packages to ensure reproducibility, and is more
# commonly ignored for libraries.
# https://python-poetry.org/docs/basic-usage/#commit-your-poetrylock-file-to-version-control
#poetry.lock

# pdm
# Similar to Pipfile.lock, it is generally recommended to include pdm.lock in version control.
#pdm.lock
# pdm stores project-wide configurations in .pdm.toml, but it is recommended to not include it
# in version control.
# https://pdm.fming.dev/#use-with-ide
.pdm.toml

# PEP 582; used by e.g. github.com/David-OConnor/pyflow and github.com/pdm-project/pdm
__pypackages__/

# Celery stuff
celerybeat-schedule
celerybeat.pid

# SageMath parsed files
*.sage.py

# Environments
.env
.venv
env/
venv/
ENV/
env.bak/
venv.bak/

# Spyder project settings
.spyderproject
.spyproject

# Rope project settings
.ropeproject

# mkdocs documentation
/site

# mypy
.mypy_cache/
.dmypy.json
dmypy.json

# Pyre type checker
.pyre/

# pytype static type analyzer
.pytype/

# Cython debug symbols
cython_debug/

# PyCharm
# JetBrains specific template is maintained in a separate JetBrains.gitignore that can
# be found at https://github.com/github/gitignore/blob/main/Global/JetBrains.gitignore
# and can be added to the global gitignore or merged into this file. For a more nuclear
# option (not recommended) you can uncomment the following to ignore the entire idea folder.
#.idea/

# Logs
logs
*.log
npm-debug.log*
yarn-debug.log*
yarn-error.log*
lerna-debug.log*
.pnpm-debug.log*

# Diagnostic reports (https://nodejs.org/api/report.html)
report.[0-9]*.[0-9]*.[0-9]*.[0-9]*.json

# Runtime data
pids
*.pid
*.seed
*.pid.lock

# Directory for instrumented libs generated by jscoverage/JSCover
lib-cov

# Coverage directory used by tools like istanbul
coverage
*.lcov

# nyc test coverage
.nyc_output

# Grunt intermediate storage (https://gruntjs.com/creating-plugins#storing-task-files)
.grunt

# Bower dependency directory (https://bower.io/)
bower_components

# node-waf configuration
.lock-wscript

# Compiled binary addons (https://nodejs.org/api/addons.html)
build/Release

# Dependency directories
node_modules/
jspm_packages/

# Snowpack dependency directory (https://snowpack.dev/)
web_modules/

# TypeScript cache
*.tsbuildinfo

# Optional npm cache directory
.npm

# Optional eslint cache
.eslintcache

# Optional stylelint cache
.stylelintcache

# Microbundle cache
.rpt2_cache/
.rts2_cache_cjs/
.rts2_cache_es/
.rts2_cache_umd/

# Optional REPL history
.node_repl_history

# Output of 'npm pack'
*.tgz

# Yarn Integrity file
.yarn-integrity

# dotenv environment variable files
.env
.env.development.local
.env.test.local
.env.production.local
.env.local

# parcel-bundler cache (https://parceljs.org/)
.cache
.parcel-cache

# Next.js build output
.next
out

# Nuxt.js build / generate output
.nuxt
dist

# Gatsby files
.cache/
# Comment in the public line in if your project uses Gatsby and not Next.js
# https://nextjs.org/blog/next-9-1#public-directory-support
# public

# vuepress build output
.vuepress/dist

# vuepress v2.x temp and cache directory
.temp
.cache

# Docusaurus cache and generated files
.docusaurus

# Serverless directories
.serverless/

# FuseBox cache
.fusebox/

# DynamoDB Local files
.dynamodb/

# TernJS port file
.tern-port

# Stores VSCode versions used for testing VSCode extensions
.vscode-test

# yarn v2
.yarn/cache
.yarn/unplugged
.yarn/build-state.yml
.yarn/install-state.gz
.pnp.*
```

@@ -11,3 +11,6 @@ node_modules

```gitignore
pnpm-lock.yaml
package-lock.json
yarn.lock

# Ignore kubernetes files
kubernetes
```
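Several of the added patterns rely on glob character classes, such as `*.py[cod]` and `report.[0-9]*.[0-9]*.[0-9]*.[0-9]*.json`. As a rough way to see which single file or directory name a pattern would catch, the sketch below runs a handful of the patterns above through Python's `fnmatch.fnmatchcase`. It is only an approximation: Git's own matcher also handles `/`-anchored paths, `**`, negation with `!` (as in `!.env.example`), and does not let `*` cross a `/`, none of which is modelled here. The helper name `matching_pattern` is hypothetical.

```python
from fnmatch import fnmatchcase
from typing import Optional

# A few patterns copied from the .gitignore hunk above.
PATTERNS = [
    "__pycache__/",
    "*.py[cod]",
    "*$py.class",
    "*.so",
    "*.egg-info/",
    ".env",
    "report.[0-9]*.[0-9]*.[0-9]*.[0-9]*.json",
]


def matching_pattern(name: str, is_dir: bool = False) -> Optional[str]:
    """Return the first pattern matching a single path component, or None.

    Approximation of .gitignore semantics: a trailing '/' restricts a
    pattern to directories; anchoring, '**' and '!' negation are ignored.
    """
    for pattern in PATTERNS:
        glob = pattern
        if glob.endswith("/"):
            if not is_dir:
                continue  # a directory-only pattern never matches a file
            glob = glob[:-1]
        # fnmatchcase avoids OS-dependent case folding.
        if fnmatchcase(name, glob):
            return pattern
    return None
```

For example, `matching_pattern("app.pyc")` returns `"*.py[cod]"`, while `matching_pattern("app.py")` returns `None` because the class `[cod]` requires one more character.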

Dockerfile (16 changes)

@@ -2,12 +2,6 @@

```dockerfile

FROM node:alpine as build

ARG OLLAMA_API_BASE_URL='/ollama/api'
RUN echo $OLLAMA_API_BASE_URL

ENV PUBLIC_API_BASE_URL $OLLAMA_API_BASE_URL
RUN echo $PUBLIC_API_BASE_URL

WORKDIR /app

COPY package.json package-lock.json ./
```

@@ -18,11 +12,13 @@ RUN npm run build

```dockerfile

FROM python:3.11-slim-buster as base

ARG OLLAMA_API_BASE_URL='/ollama/api'

ENV ENV=prod
ENV OLLAMA_API_BASE_URL $OLLAMA_API_BASE_URL
ENV WEBUI_AUTH ""

ENV OLLAMA_API_BASE_URL "/ollama/api"

ENV OPENAI_API_BASE_URL ""
ENV OPENAI_API_KEY ""

ENV WEBUI_JWT_SECRET_KEY "SECRET_KEY"

WORKDIR /app
```
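The `ENV` lines above bake default values into the image, and each one can be overridden at run time with `docker run -e NAME=value`, as the README's `docker run ... -e OLLAMA_API_BASE_URL=https://example.com/api` example in this commit does. The backend's own `config.py` is not part of this diff, so the following is only a hypothetical sketch of how a Python service could resolve these variables against the Dockerfile defaults and flag the placeholder JWT secret that ships in the image. `Settings`, `load_settings` and `DOCKERFILE_DEFAULTS` are illustrative names, not the project's API.

```python
import os
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical defaults, copied from the ENV lines in the Dockerfile hunk above.
DOCKERFILE_DEFAULTS = {
    "OLLAMA_API_BASE_URL": "/ollama/api",
    "OPENAI_API_BASE_URL": "",
    "OPENAI_API_KEY": "",
    "WEBUI_JWT_SECRET_KEY": "SECRET_KEY",
}


@dataclass(frozen=True)
class Settings:
    ollama_api_base_url: str
    openai_api_base_url: str
    openai_api_key: str
    webui_jwt_secret_key: str

    @property
    def uses_placeholder_secret(self) -> bool:
        # True when the image default was never overridden with `-e`.
        return self.webui_jwt_secret_key == DOCKERFILE_DEFAULTS["WEBUI_JWT_SECRET_KEY"]


def load_settings(env: Optional[Mapping[str, str]] = None) -> Settings:
    """Read each variable from `env` (default: os.environ), else the image default."""
    source = os.environ if env is None else env

    def get(name: str) -> str:
        return source.get(name, DOCKERFILE_DEFAULTS[name])

    return Settings(
        ollama_api_base_url=get("OLLAMA_API_BASE_URL"),
        openai_api_base_url=get("OPENAI_API_BASE_URL"),
        openai_api_key=get("OPENAI_API_KEY"),
        webui_jwt_secret_key=get("WEBUI_JWT_SECRET_KEY"),
    )
```

Inside the container these variables are always present, so the fallback mainly keeps the same code usable when the backend runs outside Docker; a start-up warning on `uses_placeholder_secret` is one way to surface an un-rotated secret.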

INSTALLATION.md (new file, 35 changes)

@@ -0,0 +1,35 @@

````markdown
### Installing Both Ollama and Ollama Web UI Using Kustomize

For cpu-only pod

```bash
kubectl apply -f ./kubernetes/manifest/base
```

For gpu-enabled pod

```bash
kubectl apply -k ./kubernetes/manifest
```

### Installing Both Ollama and Ollama Web UI Using Helm

Package Helm file first

```bash
helm package ./kubernetes/helm/
```

For cpu-only pod

```bash
helm install ollama-webui ./ollama-webui-*.tgz
```

For gpu-enabled pod

```bash
helm install ollama-webui ./ollama-webui-*.tgz --set ollama.resources.limits.nvidia.com/gpu="1"
```

Check the `kubernetes/helm/values.yaml` file to know which parameters are available for customization
````
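One detail worth checking in the GPU Helm command above: Helm's `--set` flag treats every unescaped `.` in a key as a nesting separator, so `ollama.resources.limits.nvidia.com/gpu` is likely to be read as the path `["ollama", "resources", "limits", "nvidia", "com/gpu"]` rather than as a `nvidia.com/gpu` key under `limits`. Helm's documentation describes escaping such dots with a backslash, for example `--set 'ollama.resources.limits.nvidia\.com/gpu=1'`. The simplified function below is a hypothetical illustration of that splitting rule, not Helm's actual parser.

```python
from typing import List


def split_set_key(key: str) -> List[str]:
    """Split a `--set`-style key into nested path segments.

    Unescaped dots separate segments; a backslash followed by a dot is kept
    as a literal dot inside the current segment. Simplified illustration:
    list indices, commas and quoting are not handled.
    """
    segments: List[str] = []
    current: List[str] = []
    i = 0
    while i < len(key):
        ch = key[i]
        if ch == "\\" and i + 1 < len(key) and key[i + 1] == ".":
            current.append(".")  # escaped dot stays in the segment
            i += 2
            continue
        if ch == ".":
            segments.append("".join(current))  # unescaped dot ends a segment
            current = []
        else:
            current.append(ch)
        i += 1
    segments.append("".join(current))
    return segments
```

Comparing `split_set_key("ollama.resources.limits.nvidia.com/gpu")` with the escaped variant shows why the resource name needs the backslash to stay intact.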

README.md (113 changes)

@@ -27,12 +27,16 @@ Also check our sibling project, [OllamaHub](https://ollamahub.com/), where you c

```markdown

- ⚡ **Swift Responsiveness**: Enjoy fast and responsive performance.

- 🚀 **Effortless Setup**: Install seamlessly using Docker for a hassle-free experience.
- 🚀 **Effortless Setup**: Install seamlessly using Docker or Kubernetes (kubectl, kustomize or helm) for a hassle-free experience.

- 💻 **Code Syntax Highlighting**: Enjoy enhanced code readability with our syntax highlighting feature.

- ✒️🔢 **Full Markdown and LaTeX Support**: Elevate your LLM experience with comprehensive Markdown and LaTeX capabilities for enriched interaction.

- 📜 **Prompt Preset Support**: Instantly access preset prompts using the '/' command in the chat input. Load predefined conversation starters effortlessly and expedite your interactions. Effortlessly import prompts through [OllamaHub](https://ollamahub.com/) integration.

- 👍👎 **RLHF Annotation**: Empower your messages by rating them with thumbs up and thumbs down, facilitating the creation of datasets for Reinforcement Learning from Human Feedback (RLHF). Utilize your messages to train or fine-tune models, all while ensuring the confidentiality of locally saved data.

- 📥🗑️ **Download/Delete Models**: Easily download or remove models directly from the web UI.

- ⬆️ **GGUF File Model Creation**: Effortlessly create Ollama models by uploading GGUF files directly from the web UI. Streamlined process with options to upload from your machine or download GGUF files from Hugging Face.
```

@@ -79,32 +83,6 @@ Don't forget to explore our sibling project, [OllamaHub](https://ollamahub.com/)

````markdown

- **Privacy and Data Security:** We prioritize your privacy and data security above all. Please be reassured that all data entered into the Ollama Web UI is stored locally on your device. Our system is designed to be privacy-first, ensuring that no external requests are made, and your data does not leave your local environment. We are committed to maintaining the highest standards of data privacy and security, ensuring that your information remains confidential and under your control.

### Installing Both Ollama and Ollama Web UI Using Docker Compose

If you don't have Ollama installed yet, you can use the provided Docker Compose file for a hassle-free installation. Simply run the following command:

```bash
docker compose up -d --build
```

This command will install both Ollama and Ollama Web UI on your system.

#### Enable GPU

Use the additional Docker Compose file designed to enable GPU support by running the following command:

```bash
docker compose -f docker-compose.yml -f docker-compose.gpu.yml up -d --build
```

#### Expose Ollama API outside the container stack

Deploy the service with an additional Docker Compose file designed for API exposure:

```bash
docker compose -f docker-compose.yml -f docker-compose.api.yml up -d --build
```

### Installing Ollama Web UI Only

#### Prerequisites
````

@@ -149,6 +127,69 @@ docker build -t ollama-webui .

````markdown
docker run -d -p 3000:8080 -e OLLAMA_API_BASE_URL=https://example.com/api -v ollama-webui:/app/backend/data --name ollama-webui --restart always ollama-webui
```

### Installing Both Ollama and Ollama Web UI

#### Using Docker Compose

If you don't have Ollama installed yet, you can use the provided Docker Compose file for a hassle-free installation. Simply run the following command:

```bash
docker compose up -d --build
```

This command will install both Ollama and Ollama Web UI on your system.

##### Enable GPU

Use the additional Docker Compose file designed to enable GPU support by running the following command:

```bash
docker compose -f docker-compose.yaml -f docker-compose.gpu.yaml up -d --build
```

##### Expose Ollama API outside the container stack

Deploy the service with an additional Docker Compose file designed for API exposure:

```bash
docker compose -f docker-compose.yaml -f docker-compose.api.yaml up -d --build
```

#### Using Provided `run-compose.sh` Script (Linux)

Also available on Windows under any docker-enabled WSL2 linux distro (you have to enable it from Docker Desktop)

Simply run the following command to grant execute permission to script:

```bash
chmod +x run-compose.sh
```

##### For CPU only container

```bash
./run-compose.sh
```

##### Enable GPU

For GPU enabled container (to enable this you must have your gpu driver for docker, it mostly works with nvidia so this is the official install guide: [nvidia-container-toolkit](https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/install-guide.html))
Warning! A GPU-enabled installation has only been tested using linux and nvidia GPU, full functionalities are not guaranteed under Windows or Macos or using a different GPU

```bash
./run-compose.sh --enable-gpu
```

Note that both the above commands will use the latest production docker image in repository, to be able to build the latest local version you'll need to append the `--build` parameter, for example:

```bash
./run-compose.sh --enable-gpu --build
```

#### Using Alternative Methods (Kustomize or Helm)

See [INSTALLATION.md](/INSTALLATION.md) for information on how to install and/or join our [Ollama Web UI Discord community](https://discord.gg/5rJgQTnV4s).

## How to Install Without Docker

While we strongly recommend using our convenient Docker container installation for optimal support, we understand that some situations may require a non-Docker setup, especially for development purposes. Please note that non-Docker installations are not officially supported, and you might need to troubleshoot on your own.
````
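The Docker Compose commands in the README hunk above all follow one pattern: a base `docker-compose.yaml`, optionally layered with `docker-compose.gpu.yaml` and/or `docker-compose.api.yaml`, where Compose merges the `-f` files in the order given so later files override and extend earlier ones. The hypothetical helper below assembles that argument list; the file names come from the new README text, while passing both overlays in a single run is an assumption the README does not state.

```python
from typing import List


def compose_command(enable_gpu: bool = False, expose_api: bool = False,
                    build: bool = True) -> List[str]:
    """Build the `docker compose ... up -d` argument list used in the README."""
    files = ["docker-compose.yaml"]
    if enable_gpu:
        files.append("docker-compose.gpu.yaml")  # GPU overlay
    if expose_api:
        files.append("docker-compose.api.yaml")  # exposes the Ollama API outside the stack
    cmd = ["docker", "compose"]
    for path in files:
        cmd += ["-f", path]  # later files are merged over earlier ones
    cmd += ["up", "-d"]
    if build:
        cmd.append("--build")  # rebuild images from the local checkout
    return cmd
```

Passing the result to `subprocess.run(compose_command(enable_gpu=True), check=True)` would run the equivalent of the README's GPU command; without overlays the explicit `-f docker-compose.yaml` matches what Compose loads by default.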

@@ -157,9 +198,15 @@ While we strongly recommend using our convenient Docker container installation f

```markdown

The Ollama Web UI consists of two primary components: the frontend and the backend (which serves as a reverse proxy, handling static frontend files, and additional features). Both need to be running concurrently for the development environment.

**Warning: Backend Dependency for Proper Functionality**
> [!IMPORTANT]
> The backend is required for proper functionality

### TL;DR 🚀
### Requirements 📦

- 🐰 [Bun](https://bun.sh) >= 1.0.21 or 🐢 [Node.js](https://nodejs.org/en) >= 20.10
- 🐍 [Python](https://python.org) >= 3.11

### Build and Install 🛠️

Run the following commands to install:

```

@@ -170,13 +217,17 @@ cd ollama-webui/

````markdown
# Copying required .env file
cp -RPp example.env .env

# Building Frontend
# Building Frontend Using Node
npm i
npm run build

# or Building Frontend Using Bun
# bun install
# bun run build

# Serving Frontend with the Backend
cd ./backend
pip install -r requirements.txt
pip install -r requirements.txt -U
sh start.sh
```

````

|

|

@ -4,7 +4,7 @@

|

|||

|

||||

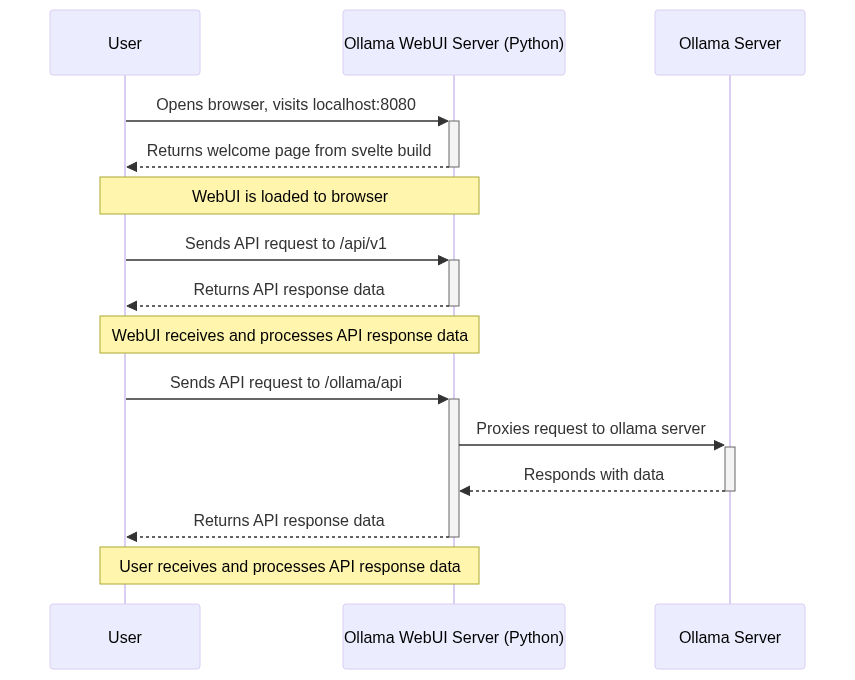

The Ollama WebUI system is designed to streamline interactions between the client (your browser) and the Ollama API. At the heart of this design is a backend reverse proxy, enhancing security and resolving CORS issues.

|

||||

|

||||

- **How it Works**: When you make a request (like `/ollama/api/tags`) from the Ollama WebUI, it doesn’t go directly to the Ollama API. Instead, it first reaches the Ollama WebUI backend. The backend then forwards this request to the Ollama API via the route you define in the `OLLAMA_API_BASE_URL` environment variable. For instance, a request to `/ollama/api/tags` in the WebUI is equivalent to `OLLAMA_API_BASE_URL/tags` in the backend.

|

||||

- **How it Works**: The Ollama WebUI is designed to interact with the Ollama API through a specific route. When a request is made from the WebUI to Ollama, it is not directly sent to the Ollama API. Initially, the request is sent to the Ollama WebUI backend via `/ollama/api` route. From there, the backend is responsible for forwarding the request to the Ollama API. This forwarding is accomplished by using the route specified in the `OLLAMA_API_BASE_URL` environment variable. Therefore, a request made to `/ollama/api` in the WebUI is effectively the same as making a request to `OLLAMA_API_BASE_URL` in the backend. For instance, a request to `/ollama/api/tags` in the WebUI is equivalent to `OLLAMA_API_BASE_URL/tags` in the backend.

|

||||

|

||||

- **Security Benefits**: This design prevents direct exposure of the Ollama API to the frontend, safeguarding against potential CORS (Cross-Origin Resource Sharing) issues and unauthorized access. Requiring authentication to access the Ollama API further enhances this security layer.

|

||||

|

||||
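The path rewriting described in this hunk can be written as a small pure function. This sketch assumes, as the text states, that the WebUI sends everything under `/ollama/api` to its backend, which appends the remainder of the path to `OLLAMA_API_BASE_URL`. The name `upstream_url` is hypothetical; the FastAPI route in this commit builds `f"{app.state.OLLAMA_API_BASE_URL}/{path}"` directly from the path it receives.

```python
def upstream_url(ollama_api_base_url: str, webui_path: str,
                 prefix: str = "/ollama/api") -> str:
    """Map a WebUI request path such as '/ollama/api/tags' to the Ollama URL."""
    # Require a real path-segment boundary so '/ollama/apix' is not accepted.
    if webui_path != prefix and not webui_path.startswith(prefix + "/"):
        raise ValueError(f"{webui_path!r} is not under {prefix!r}")
    rest = webui_path[len(prefix):].lstrip("/")  # e.g. 'tags'
    return f"{ollama_api_base_url.rstrip('/')}/{rest}"
```

With `OLLAMA_API_BASE_URL=http://localhost:11434/api`, a WebUI request to `/ollama/api/tags` maps to `http://localhost:11434/api/tags`, matching the equivalence stated above.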

@@ -27,6 +27,6 @@ docker run -d --network=host -v ollama-webui:/app/backend/data -e OLLAMA_API_BAS

```markdown
1. **Verify Ollama URL Format**:
- When running the Web UI container, ensure the `OLLAMA_API_BASE_URL` is correctly set, including the `/api` suffix. (e.g., `http://192.168.1.1:11434/api` for different host setups).
- In the Ollama WebUI, navigate to "Settings" > "General".
- Confirm that the Ollama Server URL is correctly set to `/ollama/api`, including the `/api` suffix.
- Confirm that the Ollama Server URL is correctly set to `[OLLAMA URL]/api` (e.g., `http://localhost:11434/api`), including the `/api` suffix.

By following these enhanced troubleshooting steps, connection issues should be effectively resolved. For further assistance or queries, feel free to reach out to us on our community Discord.
```
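The first troubleshooting step, confirming that `OLLAMA_API_BASE_URL` is an absolute URL ending in `/api`, can be checked mechanically. Below is a minimal sketch using only `urllib.parse`; the function name and messages are hypothetical, and it checks only the shape of the URL, not whether Ollama is reachable. It would also flag the relative `/ollama/api` value used as the Dockerfile default; how the backend resolves that default is not shown in this diff, so treat the check as applying to explicit overrides such as `http://192.168.1.1:11434/api`.

```python
from typing import List
from urllib.parse import urlsplit


def check_ollama_base_url(url: str) -> List[str]:
    """Return problems found in an OLLAMA_API_BASE_URL value (empty list if none)."""
    problems: List[str] = []
    parts = urlsplit(url)
    if parts.scheme not in ("http", "https"):
        problems.append("URL should start with http:// or https://")
    if not parts.netloc:
        problems.append("URL is missing a host, e.g. 192.168.1.1:11434")
    # A trailing slash after /api is tolerated.
    if not parts.path.rstrip("/").endswith("/api"):
        problems.append("URL should end with the /api suffix")
    return problems
```

A value like `http://localhost:11434` returns a single problem about the missing `/api` suffix, which is the mistake the troubleshooting step targets.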

backend/.gitignore (vendored, 3 changes)

@@ -4,4 +4,5 @@ _old

```gitignore
uploads
.ipynb_checkpoints
*.db
_test
_test
Pipfile
```

|

|

@ -1,115 +1,111 @@

|

|||

from flask import Flask, request, Response, jsonify

|

||||

from flask_cors import CORS

|

||||

|

||||

from fastapi import FastAPI, Request, Response, HTTPException, Depends

|

||||

from fastapi.middleware.cors import CORSMiddleware

|

||||

from fastapi.responses import StreamingResponse

|

||||

from fastapi.concurrency import run_in_threadpool

|

||||

|

||||

import requests

|

||||

import json

|

||||

|

||||

from pydantic import BaseModel

|

||||

|

||||

from apps.web.models.users import Users

|

||||

from constants import ERROR_MESSAGES

|

||||

from utils.utils import extract_token_from_auth_header

|

||||

from utils.utils import decode_token, get_current_user

|

||||

from config import OLLAMA_API_BASE_URL, WEBUI_AUTH

|

||||

|

||||

app = Flask(__name__)

|

||||

CORS(

|

||||

app

|

||||

) # Enable Cross-Origin Resource Sharing (CORS) to allow requests from different domains

|

||||

app = FastAPI()

|

||||

app.add_middleware(

|

||||

CORSMiddleware,

|

||||

allow_origins=["*"],

|

||||

allow_credentials=True,

|

||||

allow_methods=["*"],

|

||||

allow_headers=["*"],

|

||||

)

|

||||

|

||||

# Define the target server URL

|

||||

TARGET_SERVER_URL = OLLAMA_API_BASE_URL

|

||||

app.state.OLLAMA_API_BASE_URL = OLLAMA_API_BASE_URL

|

||||

|

||||

# TARGET_SERVER_URL = OLLAMA_API_BASE_URL

|

||||

|

||||

|

||||

@app.route("/", defaults={"path": ""}, methods=["GET", "POST", "PUT", "DELETE"])

|

||||

@app.route("/<path:path>", methods=["GET", "POST", "PUT", "DELETE"])

|

||||

def proxy(path):

|

||||

# Combine the base URL of the target server with the requested path

|

||||

target_url = f"{TARGET_SERVER_URL}/{path}"

|

||||

print(target_url)

|

||||

@app.get("/url")

|

||||

async def get_ollama_api_url(user=Depends(get_current_user)):

|

||||

if user and user.role == "admin":

|

||||

return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}

|

||||

else:

|

||||

raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)

|

||||

|

||||

# Get data from the original request

|

||||

data = request.get_data()

|

||||

|

||||

class UrlUpdateForm(BaseModel):

|

||||

url: str

|

||||

|

||||

|

||||

@app.post("/url/update")

|

||||

async def update_ollama_api_url(

|

||||

form_data: UrlUpdateForm, user=Depends(get_current_user)

|

||||

):

|

||||

if user and user.role == "admin":

|

||||

app.state.OLLAMA_API_BASE_URL = form_data.url

|

||||

return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}

|

||||

else:

|

||||

raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)

|

||||

|

||||

|

||||

@app.api_route("/{path:path}", methods=["GET", "POST", "PUT", "DELETE"])

|

||||

async def proxy(path: str, request: Request, user=Depends(get_current_user)):

|

||||

target_url = f"{app.state.OLLAMA_API_BASE_URL}/{path}"

|

||||

|

||||

body = await request.body()

|

||||

headers = dict(request.headers)

|

||||

|

||||

# Basic RBAC support

|

||||

if WEBUI_AUTH:

        if "Authorization" in headers:
            token = extract_token_from_auth_header(headers["Authorization"])
            user = Users.get_user_by_token(token)
            if user:
                # Only user and admin roles can access
                if user.role in ["user", "admin"]:
                    if path in ["pull", "delete", "push", "copy", "create"]:
                        # Only admin role can perform actions above
                        if user.role == "admin":
                            pass
                        else:
                            return (
                                jsonify({"detail": ERROR_MESSAGES.ACCESS_PROHIBITED}),
                                401,
                            )
                    else:
                        pass
                else:
                    return jsonify({"detail": ERROR_MESSAGES.ACCESS_PROHIBITED}), 401
            else:
                return jsonify({"detail": ERROR_MESSAGES.UNAUTHORIZED}), 401
        else:
            return jsonify({"detail": ERROR_MESSAGES.UNAUTHORIZED}), 401
    if user.role in ["user", "admin"]:
        if path in ["pull", "delete", "push", "copy", "create"]:
            if user.role != "admin":
                raise HTTPException(
                    status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED
                )
    else:
        pass

    r = None
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)

    headers.pop("Host", None)
    headers.pop("Authorization", None)
    headers.pop("Origin", None)
    headers.pop("Referer", None)

    r = None

    def get_request():
        nonlocal r
        try:
            r = requests.request(
                method=request.method,
                url=target_url,
                data=body,
                headers=headers,
                stream=True,
            )

            r.raise_for_status()

            return StreamingResponse(
                r.iter_content(chunk_size=8192),
                status_code=r.status_code,
                headers=dict(r.headers),
            )
        except Exception as e:
            raise e

    try:
        # Make a request to the target server
        r = requests.request(
            method=request.method,
            url=target_url,
            data=data,
            headers=headers,
            stream=True,  # Enable streaming for server-sent events
        )

        r.raise_for_status()

        # Proxy the target server's response to the client
        def generate():
            for chunk in r.iter_content(chunk_size=8192):
                yield chunk

        response = Response(generate(), status=r.status_code)

        # Copy headers from the target server's response to the client's response
        for key, value in r.headers.items():
            response.headers[key] = value

        return response
        return await run_in_threadpool(get_request)
    except Exception as e:
        print(e)
        error_detail = "Ollama WebUI: Server Connection Error"
        if r != None:
            print(r.text)
            res = r.json()
            if "error" in res:
                error_detail = f"Ollama: {res['error']}"
            print(res)
        if r is not None:
            try:
                res = r.json()
                if "error" in res:
                    error_detail = f"Ollama: {res['error']}"
            except:
                error_detail = f"Ollama: {e}"

        return (
            jsonify(
                {
                    "detail": error_detail,
                    "message": str(e),
                }
            ),
            400,
        raise HTTPException(
            status_code=r.status_code if r else 500,
            detail=error_detail,
        )


if __name__ == "__main__":
    app.run(debug=True)
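
Both proxy versions drop `Host`, `Authorization`, `Origin` and `Referer` using title-case `headers.pop(...)` calls. In the FastAPI version, `headers` comes from `dict(request.headers)`. ASGI delivers header names in lowercase, so those pops are likely no-ops, and the WebUI's own `Authorization` header may then be forwarded upstream. The sketch below shows a case-insensitive alternative. It assumes the incoming headers are a plain `dict` of strings. `strip_headers` and `DROP` are illustrative names and are not part of the codebase.

```python
from typing import Dict, Iterable

# Header names the proxy means to drop (taken from the pops above)
DROP = ("Host", "Authorization", "Origin", "Referer")


def strip_headers(headers: Dict[str, str],
                  drop: Iterable[str] = DROP) -> Dict[str, str]:
    """Return a copy of `headers` without the `drop` names, ignoring case."""
    blocked = {name.lower() for name in drop}
    return {k: v for k, v in headers.items() if k.lower() not in blocked}


if __name__ == "__main__":
    incoming = {"host": "localhost:8080", "authorization": "Bearer abc",
                "content-type": "application/json"}
    print(strip_headers(incoming))  # {'content-type': 'application/json'}
```

One way to apply it would be `headers = strip_headers(dict(request.headers))` in place of the four pops.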


127
backend/apps/ollama/old_main.py
Normal file
@@ -0,0 +1,127 @@

from fastapi import FastAPI, Request, Response, HTTPException, Depends
from fastapi.middleware.cors import CORSMiddleware
from fastapi.responses import StreamingResponse

import requests
import json
from pydantic import BaseModel

from apps.web.models.users import Users
from constants import ERROR_MESSAGES
from utils.utils import decode_token, get_current_user
from config import OLLAMA_API_BASE_URL, WEBUI_AUTH

import aiohttp

app = FastAPI()
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

app.state.OLLAMA_API_BASE_URL = OLLAMA_API_BASE_URL

# TARGET_SERVER_URL = OLLAMA_API_BASE_URL


@app.get("/url")
async def get_ollama_api_url(user=Depends(get_current_user)):
    if user and user.role == "admin":
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


class UrlUpdateForm(BaseModel):
    url: str


@app.post("/url/update")
async def update_ollama_api_url(
    form_data: UrlUpdateForm, user=Depends(get_current_user)
):
    if user and user.role == "admin":
        app.state.OLLAMA_API_BASE_URL = form_data.url
        return {"OLLAMA_API_BASE_URL": app.state.OLLAMA_API_BASE_URL}
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


# async def fetch_sse(method, target_url, body, headers):
#     async with aiohttp.ClientSession() as session:
#         try:
#             async with session.request(
#                 method, target_url, data=body, headers=headers
#             ) as response:
#                 print(response.status)
#                 async for line in response.content:
#                     yield line
#         except Exception as e:
#             print(e)
#             error_detail = "Ollama WebUI: Server Connection Error"
#             yield json.dumps({"error": error_detail, "message": str(e)}).encode()


@app.api_route("/{path:path}", methods=["GET", "POST", "PUT", "DELETE"])
async def proxy(path: str, request: Request, user=Depends(get_current_user)):
    target_url = f"{app.state.OLLAMA_API_BASE_URL}/{path}"
    print(target_url)

    body = await request.body()
    headers = dict(request.headers)

    if user.role in ["user", "admin"]:
        if path in ["pull", "delete", "push", "copy", "create"]:
            if user.role != "admin":
                raise HTTPException(
                    status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED
                )
    else:
        raise HTTPException(status_code=401, detail=ERROR_MESSAGES.ACCESS_PROHIBITED)

    headers.pop("Host", None)
    headers.pop("Authorization", None)
    headers.pop("Origin", None)
    headers.pop("Referer", None)

    session = aiohttp.ClientSession()
    response = None
    try:
        response = await session.request(
            request.method, target_url, data=body, headers=headers
        )

        print(response)
        if not response.ok:
            data = await response.json()
            print(data)
            response.raise_for_status()

        async def generate():
            async for line in response.content:
                print(line)
                yield line
            await session.close()

        return StreamingResponse(generate(), response.status)

    except Exception as e:
        print(e)
        error_detail = "Ollama WebUI: Server Connection Error"

        if response is not None:
            try:
                res = await response.json()
                if "error" in res:
                    error_detail = f"Ollama: {res['error']}"
            except:
                error_detail = f"Ollama: {e}"

        await session.close()
        raise HTTPException(
            status_code=response.status if response else 500,
            detail=error_detail,
        )
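
In the aiohttp-based `proxy` above, `generate()` awaits `session.close()` only after `response.content` has been read to the end. If the client disconnects mid-stream, the generator is closed early, that line never runs, and the session is left open. Moving the close into a `finally` clause covers both exit paths. The sketch below replaces aiohttp with a hypothetical `FakeSession` and an in-memory async generator, so it runs on the standard library alone.

```python
import asyncio
from typing import AsyncIterator, List, Tuple


class FakeSession:
    """Hypothetical stand-in for aiohttp.ClientSession: only records close()."""

    def __init__(self) -> None:
        self.closed = False

    async def close(self) -> None:
        self.closed = True


async def upstream(chunks: List[bytes]) -> AsyncIterator[bytes]:
    # Plays the role of `response.content` in the proxy above
    for chunk in chunks:
        yield chunk


async def relay(session: FakeSession,
                body: AsyncIterator[bytes]) -> AsyncIterator[bytes]:
    try:
        async for chunk in body:
            yield chunk
    finally:
        # Runs when the body is exhausted and when the consumer stops early
        await session.close()


async def demo() -> Tuple[List[bytes], bool, bool]:
    full = FakeSession()
    received = [c async for c in relay(full, upstream([b"a", b"b"]))]

    early = FakeSession()
    gen = relay(early, upstream([b"a", b"b", b"c"]))
    await gen.__anext__()  # read one chunk, then "disconnect"
    await gen.aclose()     # throws GeneratorExit into relay, so finally runs
    return received, full.closed, early.closed


if __name__ == "__main__":
    print(asyncio.run(demo()))  # ([b'a', b'b'], True, True)
```

In the proxy, the same shape would be roughly `StreamingResponse(relay(session, response.content), response.status)`. Whether the generator is actually closed on disconnect depends on the ASGI server and the Starlette version, so treat this as a defensive pattern rather than a guarantee.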


143
backend/apps/openai/main.py
Normal file
@@ -0,0 +1,143 @@

from fastapi import FastAPI, Request, Response, HTTPException, Depends
from fastapi.middleware.cors import CORSMiddleware
from fastapi.responses import StreamingResponse, JSONResponse

import requests
import json
from pydantic import BaseModel

from apps.web.models.users import Users
from constants import ERROR_MESSAGES
from utils.utils import decode_token, get_current_user
from config import OPENAI_API_BASE_URL, OPENAI_API_KEY

app = FastAPI()
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

app.state.OPENAI_API_BASE_URL = OPENAI_API_BASE_URL
app.state.OPENAI_API_KEY = OPENAI_API_KEY


class UrlUpdateForm(BaseModel):
    url: str


class KeyUpdateForm(BaseModel):
    key: str


@app.get("/url")
async def get_openai_url(user=Depends(get_current_user)):
    if user and user.role == "admin":
        return {"OPENAI_API_BASE_URL": app.state.OPENAI_API_BASE_URL}
    else:
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


@app.post("/url/update")
async def update_openai_url(form_data: UrlUpdateForm,
                            user=Depends(get_current_user)):
    if user and user.role == "admin":
        app.state.OPENAI_API_BASE_URL = form_data.url
        return {"OPENAI_API_BASE_URL": app.state.OPENAI_API_BASE_URL}
    else:
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


@app.get("/key")
async def get_openai_key(user=Depends(get_current_user)):
    if user and user.role == "admin":
        return {"OPENAI_API_KEY": app.state.OPENAI_API_KEY}
    else:
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


@app.post("/key/update")
async def update_openai_key(form_data: KeyUpdateForm,
                            user=Depends(get_current_user)):
    if user and user.role == "admin":
        app.state.OPENAI_API_KEY = form_data.key
        return {"OPENAI_API_KEY": app.state.OPENAI_API_KEY}
    else:
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.ACCESS_PROHIBITED)


@app.api_route("/{path:path}", methods=["GET", "POST", "PUT", "DELETE"])
async def proxy(path: str, request: Request, user=Depends(get_current_user)):
    target_url = f"{app.state.OPENAI_API_BASE_URL}/{path}"
    print(target_url, app.state.OPENAI_API_KEY)

    if user.role not in ["user", "admin"]:
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.ACCESS_PROHIBITED)
    if app.state.OPENAI_API_KEY == "":
        raise HTTPException(status_code=401,
                            detail=ERROR_MESSAGES.API_KEY_NOT_FOUND)

    body = await request.body()
    # headers = dict(request.headers)
    # print(headers)

    headers = {}
    headers["Authorization"] = f"Bearer {app.state.OPENAI_API_KEY}"
    headers["Content-Type"] = "application/json"

    try:
        r = requests.request(
            method=request.method,
            url=target_url,
            data=body,
            headers=headers,
            stream=True,
        )

        r.raise_for_status()

        # Check if response is SSE
        if "text/event-stream" in r.headers.get("Content-Type", ""):
            return StreamingResponse(
                r.iter_content(chunk_size=8192),
                status_code=r.status_code,
                headers=dict(r.headers),
            )
        else:
            # For non-SSE, read the response and return it
            # response_data = (
            #     r.json()
            #     if r.headers.get("Content-Type", "")
            #     == "application/json"
            #     else r.text
            # )

            response_data = r.json()

            print(type(response_data))

            if "openai" in app.state.OPENAI_API_BASE_URL and path == "models":
                response_data["data"] = list(
                    filter(lambda model: "gpt" in model["id"],
                           response_data["data"]))

            return response_data
    except Exception as e:
        print(e)
        error_detail = "Ollama WebUI: Server Connection Error"
        if r is not None:
            try:
                res = r.json()
                if "error" in res:
                    error_detail = f"External: {res['error']}"
            except:
                error_detail = f"External: {e}"

        raise HTTPException(status_code=r.status_code, detail=error_detail)
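
Two details in the proxies' error handling are easy to trip over.

- In the OpenAI proxy, `r` is first bound inside `try`. If `requests.request` itself raises (for example on a refused connection), `if r is not None` fails with `NameError`. Separately, `raise HTTPException(status_code=r.status_code, ...)` fails whenever `r` is `None`.
- In the Ollama proxy, `r.status_code if r else 500` relies on truthiness. `requests.Response.__bool__` returns `r.ok`, so every 4xx/5xx reply falls back to 500.

The sketch below shows the except-path logic, assuming `r = None` is set before the `try`. `FakeResponse` is a hypothetical stand-in that copies only the parts of `requests.Response` used here.

```python
import json
from typing import Any, Optional, Tuple


class FakeResponse:
    """Hypothetical stand-in for the parts of requests.Response used below."""

    def __init__(self, status_code: int, text: str) -> None:
        self.status_code = status_code
        self.text = text

    @property
    def ok(self) -> bool:
        return self.status_code < 400

    def __bool__(self) -> bool:
        # requests.Response makes truthiness equal to `ok`
        return self.ok

    def json(self) -> Any:
        return json.loads(self.text)


def error_status_and_detail(r: Optional[FakeResponse], exc: Exception,
                            prefix: str = "External") -> Tuple[int, str]:
    detail = "Ollama WebUI: Server Connection Error"
    if r is not None:
        try:
            res = r.json()
            if isinstance(res, dict) and "error" in res:
                detail = f"{prefix}: {res['error']}"
        except ValueError:  # json.JSONDecodeError is a ValueError
            detail = f"{prefix}: {exc}"
    # Compare against None: `if r` is False for every 4xx/5xx response
    status = r.status_code if r is not None else 500
    return status, detail


if __name__ == "__main__":
    print(error_status_and_detail(None, ConnectionError("refused")))
    # (500, 'Ollama WebUI: Server Connection Error')
    not_found = FakeResponse(404, '{"error": "model not found"}')
    print(bool(not_found), error_status_and_detail(not_found, RuntimeError()))
    # False (404, 'External: model not found')
```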


@@ -1,13 +1,16 @@

from fastapi import FastAPI, Request, Depends, HTTPException
from fastapi import FastAPI, Depends
from fastapi.routing import APIRoute
from fastapi.middleware.cors import CORSMiddleware

from apps.web.routers import auths, users, chats, modelfiles, utils
from apps.web.routers import auths, users, chats, modelfiles, prompts, configs, utils
from config import WEBUI_VERSION, WEBUI_AUTH

app = FastAPI()

origins = ["*"]

app.state.ENABLE_SIGNUP = True
app.state.DEFAULT_MODELS = None

app.add_middleware(
    CORSMiddleware,
    allow_origins=origins,


@@ -16,16 +19,23 @@ app.add_middleware(

    allow_headers=["*"],
)


app.include_router(auths.router, prefix="/auths", tags=["auths"])
app.include_router(users.router, prefix="/users", tags=["users"])
app.include_router(chats.router, prefix="/chats", tags=["chats"])
app.include_router(modelfiles.router, prefix="/modelfiles", tags=["modelfiles"])

app.include_router(modelfiles.router,
                   prefix="/modelfiles",
                   tags=["modelfiles"])
app.include_router(prompts.router, prefix="/prompts", tags=["prompts"])

app.include_router(configs.router, prefix="/configs", tags=["configs"])
app.include_router(utils.router, prefix="/utils", tags=["utils"])


@app.get("/")
async def get_status():
    return {"status": True, "version": WEBUI_VERSION, "auth": WEBUI_AUTH}
    return {
        "status": True,
        "version": WEBUI_VERSION,
        "auth": WEBUI_AUTH,
        "default_models": app.state.DEFAULT_MODELS,
    }


@@ -4,7 +4,6 @@ import time

import uuid
from peewee import *


from apps.web.models.users import UserModel, Users
from utils.utils import (
    verify_password,


@@ -123,6 +122,15 @@ class AuthsTable:

        except:
            return False

    def update_email_by_id(self, id: str, email: str) -> bool:
        try:
            query = Auth.update(email=email).where(Auth.id == id)
            result = query.execute()

            return True if result == 1 else False
        except:
            return False

    def delete_auth_by_id(self, id: str) -> bool:
        try:
            # Delete User
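
`update_email_by_id` relies on peewee's `update(...).execute()` returning the number of modified rows, which is why it checks `result == 1`. The sketch below applies the same idea with the standard-library `sqlite3` module. It assumes a hypothetical `auth` table whose `email` column is unique. Catching `sqlite3.IntegrityError` instead of using a bare `except:` keeps unrelated errors visible.

```python
import sqlite3


def update_email_by_id(conn: sqlite3.Connection, id: str, email: str) -> bool:
    try:
        with conn:  # commit on success, roll back on error
            cur = conn.execute("UPDATE auth SET email = ? WHERE id = ?",
                               (email, id))
    except sqlite3.IntegrityError:
        # e.g. the new address already belongs to another account
        return False
    # rowcount is the number of rows the UPDATE modified
    return cur.rowcount == 1


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE auth (id TEXT PRIMARY KEY, email TEXT UNIQUE)")
    conn.execute("INSERT INTO auth VALUES ('u1', 'a@example.com')")
    print(update_email_by_id(conn, "u1", "new@example.com"))    # True
    print(update_email_by_id(conn, "missing", "x@example.com"))  # False
```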


@@ -3,14 +3,12 @@ from typing import List, Union, Optional

from peewee import *
from playhouse.shortcuts import model_to_dict


import json
import uuid
import time

from apps.web.internal.db import DB


####################
# Chat DB Schema
####################


@@ -62,23 +60,23 @@ class ChatTitleIdResponse(BaseModel):



class ChatTable:

    def __init__(self, db):
        self.db = db
        db.create_tables([Chat])

    def insert_new_chat(self, user_id: str, form_data: ChatForm) -> Optional[ChatModel]:
    def insert_new_chat(self, user_id: str,
                        form_data: ChatForm) -> Optional[ChatModel]:
        id = str(uuid.uuid4())
        chat = ChatModel(
            **{
                "id": id,
                "user_id": user_id,
                "title": form_data.chat["title"]
                if "title" in form_data.chat
                else "New Chat",
                "title": form_data.chat["title"] if "title" in
                    form_data.chat else "New Chat",
                "chat": json.dumps(form_data.chat),
                "timestamp": int(time.time()),
            }
        )
            })

        result = Chat.create(**chat.model_dump())
        return chat if result else None


@@ -111,27 +109,25 @@ class ChatTable:

        except:
            return None

    def get_chat_lists_by_user_id(
        self, user_id: str, skip: int = 0, limit: int = 50
    ) -> List[ChatModel]:
    def get_chat_lists_by_user_id(self,
                                  user_id: str,
                                  skip: int = 0,
                                  limit: int = 50) -> List[ChatModel]:
        return [
            ChatModel(**model_to_dict(chat))
            for chat in Chat.select()
            .where(Chat.user_id == user_id)
            .order_by(Chat.timestamp.desc())
            ChatModel(**model_to_dict(chat)) for chat in Chat.select().where(
                Chat.user_id == user_id).order_by(Chat.timestamp.desc())
            # .limit(limit)
            # .offset(skip)
        ]

    def get_all_chats_by_user_id(self, user_id: str) -> List[ChatModel]:
        return [
            ChatModel(**model_to_dict(chat))
            for chat in Chat.select()
            .where(Chat.user_id == user_id)
            .order_by(Chat.timestamp.desc())
            ChatModel(**model_to_dict(chat)) for chat in Chat.select().where(
                Chat.user_id == user_id).order_by(Chat.timestamp.desc())
        ]

    def get_chat_by_id_and_user_id(self, id: str, user_id: str) -> Optional[ChatModel]:
    def get_chat_by_id_and_user_id(self, id: str,
                                   user_id: str) -> Optional[ChatModel]:
        try:
            chat = Chat.get(Chat.id == id, Chat.user_id == user_id)
            return ChatModel(**model_to_dict(chat))
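
Both versions of `get_chat_lists_by_user_id` accept `skip` and `limit` but leave `.limit(limit)` and `.offset(skip)` commented out, so every chat for the user is returned. Peewee's `.limit()` and `.offset()` correspond to SQL `LIMIT` and `OFFSET`. The sketch below shows the intended newest-first page using the standard-library `sqlite3` module, against a hypothetical `chat` table that holds only the columns used here.

```python
import sqlite3
from typing import List, Tuple


def get_chat_lists_by_user_id(conn: sqlite3.Connection, user_id: str,
                              skip: int = 0,
                              limit: int = 50) -> List[Tuple[str, str, int]]:
    """Newest-first page of (id, title, timestamp) rows for one user."""
    cur = conn.execute(
        "SELECT id, title, timestamp FROM chat WHERE user_id = ? "
        "ORDER BY timestamp DESC LIMIT ? OFFSET ?",
        (user_id, limit, skip),
    )
    return cur.fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE chat (id TEXT, user_id TEXT, title TEXT, "
                 "timestamp INTEGER)")
    conn.executemany("INSERT INTO chat VALUES (?, ?, ?, ?)",
                     [(f"c{i}", "u1", f"Chat {i}", i) for i in range(5)])
    # Second page of two: skips the newest chat (c4)
    print(get_chat_lists_by_user_id(conn, "u1", skip=1, limit=2))
    # [('c3', 'Chat 3', 3), ('c2', 'Chat 2', 2)]
```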


@@ -146,7 +142,8 @@ class ChatTable:


    def delete_chat_by_id_and_user_id(self, id: str, user_id: str) -> bool:
        try:
            query = Chat.delete().where((Chat.id == id) & (Chat.user_id == user_id))
            query = Chat.delete().where((Chat.id == id)
                                        & (Chat.user_id == user_id))
            query.execute()  # Remove the rows, return number of rows removed.

            return True
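
`delete_chat_by_id_and_user_id` filters on both `id` and `user_id`, so one user cannot delete another user's chat. However, it returns `True` even when nothing matched, although its inline comment notes that `execute()` reports the number of rows removed. The sketch below surfaces that count, again using `sqlite3` and a hypothetical `chat` table.

```python
import sqlite3


def delete_chat_by_id_and_user_id(conn: sqlite3.Connection, id: str,
                                  user_id: str) -> bool:
    with conn:  # commit on success, roll back on error
        cur = conn.execute("DELETE FROM chat WHERE id = ? AND user_id = ?",
                           (id, user_id))
    # True only if a chat owned by this user was actually removed
    return cur.rowcount > 0


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE chat (id TEXT, user_id TEXT)")
    conn.execute("INSERT INTO chat VALUES ('c1', 'u1')")
    print(delete_chat_by_id_and_user_id(conn, "c1", "u2"))  # False
    print(delete_chat_by_id_and_user_id(conn, "c1", "u1"))  # True
```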


@@ -12,7 +12,7 @@ from apps.web.internal.db import DB

import json

####################
# User DB Schema
# Modelfile DB Schema
####################



@@ -58,13 +58,14 @@ class ModelfileResponse(BaseModel):



class ModelfilesTable:

    def __init__(self, db):
        self.db = db
        self.db.create_tables([Modelfile])

    def insert_new_modelfile(
        self, user_id: str, form_data: ModelfileForm
    ) -> Optional[ModelfileModel]:
            self, user_id: str,
            form_data: ModelfileForm) -> Optional[ModelfileModel]:
        if "tagName" in form_data.modelfile:
            modelfile = ModelfileModel(
                **{


@@ -72,8 +73,7 @@ class ModelfilesTable:
                    "tag_name": form_data.modelfile["tagName"],
                    "modelfile": json.dumps(form_data.modelfile),
                    "timestamp": int(time.time()),
                }
            )
                })

            try:
                result = Modelfile.create(**modelfile.model_dump())


@@ -87,28 +87,29 @@ class ModelfilesTable:

        else:
            return None

    def get_modelfile_by_tag_name(self, tag_name: str) -> Optional[ModelfileModel]:
    def get_modelfile_by_tag_name(self,
                                  tag_name: str) -> Optional[ModelfileModel]:
        try:
            modelfile = Modelfile.get(Modelfile.tag_name == tag_name)
            return ModelfileModel(**model_to_dict(modelfile))
        except:
            return None

    def get_modelfiles(self, skip: int = 0, limit: int = 50) -> List[ModelfileResponse]:
    def get_modelfiles(self,
                       skip: int = 0,
                       limit: int = 50) -> List[ModelfileResponse]:
        return [
            ModelfileResponse(
                **{
                    **model_to_dict(modelfile),
                    "modelfile": json.loads(modelfile.modelfile),
                }
            )
            for modelfile in Modelfile.select()
                    "modelfile":
                        json.loads(modelfile.modelfile),
                }) for modelfile in Modelfile.select()
            # .limit(limit).offset(skip)
        ]

    def update_modelfile_by_tag_name(
        self, tag_name: str, modelfile: dict
    ) -> Optional[ModelfileModel]:
            self, tag_name: str, modelfile: dict) -> Optional[ModelfileModel]:
        try:
            query = Modelfile.update(
                modelfile=json.dumps(modelfile),
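
`ModelfilesTable` stores the whole modelfile as a JSON string. `insert_new_modelfile` proceeds only when the payload has a `tagName` and serializes it with `json.dumps`, and `get_modelfiles` decodes it again with `json.loads`. The sketch below shows that round trip without a database. The row layout (including `user_id`) and the helper names `to_row` and `from_row` are assumptions made for illustration.

```python
import json
import time
from typing import Any, Dict, Optional


def to_row(user_id: str, modelfile: Dict[str, Any]) -> Optional[Dict[str, Any]]:
    """Build a storable row, or None when the payload has no tagName."""
    if "tagName" not in modelfile:
        return None
    return {
        "tag_name": modelfile["tagName"],
        "user_id": user_id,
        "modelfile": json.dumps(modelfile),  # whole payload kept as text
        "timestamp": int(time.time()),
    }


def from_row(row: Dict[str, Any]) -> Dict[str, Any]:
    """Decode the JSON text column back into a dict, as get_modelfiles does."""
    return {**row, "modelfile": json.loads(row["modelfile"])}


if __name__ == "__main__":
    row = to_row("u1", {"tagName": "example:latest", "title": "Example"})
    print(from_row(row)["modelfile"]["title"])  # Example
    print(to_row("u1", {"title": "no tag"}))    # None
```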


115
backend/apps/web/models/prompts.py
Normal file
@@ -0,0 +1,115 @@

from pydantic import BaseModel
from peewee import *
from playhouse.shortcuts import model_to_dict
from typing import List, Union, Optional
import time

from utils.utils import decode_token
from utils.misc import get_gravatar_url

from apps.web.internal.db import DB

import json

####################
# Prompts DB Schema
####################


class Prompt(Model):
    command = CharField(unique=True)
    user_id = CharField()
    title = CharField()
    content = TextField()
    timestamp = DateField()

    class Meta:
        database = DB


class PromptModel(BaseModel):
    command: str
    user_id: str
    title: str
    content: str
    timestamp: int  # timestamp in epoch


####################
# Forms
####################


class PromptForm(BaseModel):
    command: str
    title: str
    content: str


class PromptsTable:

    def __init__(self, db):
        self.db = db
        self.db.create_tables([Prompt])

    def insert_new_prompt(self, user_id: str,
                          form_data: PromptForm) -> Optional[PromptModel]:
        prompt = PromptModel(
            **{
                "user_id": user_id,
                "command": form_data.command,
                "title": form_data.title,
                "content": form_data.content,
                "timestamp": int(time.time()),
            })

        try:
            result = Prompt.create(**prompt.model_dump())
            if result:
                return prompt
            else:
                return None
        except:
            return None

    def get_prompt_by_command(self, command: str) -> Optional[PromptModel]:
        try:
            prompt = Prompt.get(Prompt.command == command)
            return PromptModel(**model_to_dict(prompt))
        except:
            return None

    def get_prompts(self) -> List[PromptModel]:
        return [
            PromptModel(**model_to_dict(prompt)) for prompt in Prompt.select()
            # .limit(limit).offset(skip)
        ]
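
`Prompt.command` is declared `CharField(unique=True)`, so inserting a second prompt with the same command fails, and `insert_new_prompt` returns `None` from its `except` branch. The peewee model also declares `timestamp = DateField()`, while `PromptModel` and the insert use an integer epoch. The sketch below uses the standard-library `sqlite3` module with a hypothetical schema that stores the timestamp as `INTEGER` and catches only the uniqueness violation.

```python
import sqlite3
import time
from typing import Dict, Optional

# Hypothetical schema mirroring the Prompt model, with an INTEGER timestamp
SCHEMA = """
CREATE TABLE IF NOT EXISTS prompt (
    command   TEXT NOT NULL UNIQUE,
    user_id   TEXT NOT NULL,
    title     TEXT NOT NULL,
    content   TEXT NOT NULL,
    timestamp INTEGER NOT NULL
)
"""


def insert_new_prompt(conn: sqlite3.Connection, user_id: str, command: str,
                      title: str, content: str) -> Optional[Dict[str, object]]:
    prompt = {"command": command, "user_id": user_id, "title": title,
              "content": content, "timestamp": int(time.time())}
    try:
        with conn:  # commit on success, roll back on error
            conn.execute(
                "INSERT INTO prompt (command, user_id, title, content, timestamp) "
                "VALUES (:command, :user_id, :title, :content, :timestamp)",
                prompt,
            )
    except sqlite3.IntegrityError:
        # Duplicate command: report failure instead of overwriting
        return None
    return prompt


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    print(insert_new_prompt(conn, "u1", "/summarize", "Summarize", "Sum up:") is not None)  # True
    print(insert_new_prompt(conn, "u2", "/summarize", "Dup", "x"))  # None
```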